Conventional Ad Testing Is Dead, Long Live AI Testing

Ad Testing Reviewed

Over the past decade, ad testing has mostly been a simple A/B test. You change the messaging or URL, serve the two ads in a 50/50 split, and, once the results reach statistical significance, see which ad performed best on CTR and other KPIs such as conversions and conversion rate.
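
"Statistical significance" in a 50/50 CTR split is commonly checked with a two-proportion z-test. This is one standard option, not the only one. The minimal Python sketch below assumes you have click and impression totals for each ad. The counts in the usage lines are hypothetical, and the 0.05 threshold is a common convention, not a platform rule.

```python
import math


def two_proportion_z_test(clicks_a, imps_a, clicks_b, imps_b):
    """Two-sided z-test for a difference in CTR between ad A and ad B.

    Returns (z, p_value). A positive z means ad A has the higher CTR.
    """
    p_a = clicks_a / imps_a
    p_b = clicks_b / imps_b
    # Pooled CTR under the null hypothesis that both ads perform the same.
    pooled = (clicks_a + clicks_b) / (imps_a + imps_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / imps_a + 1 / imps_b))
    if se == 0:
        # No clicks at all (or clicks on every impression): nothing to compare.
        return 0.0, 1.0
    z = (p_a - p_b) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical split: ad A got 300 clicks and ad B got 240, each on 10,000 impressions.
z, p = two_proportion_z_test(300, 10_000, 240, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 0.05: {p < 0.05}")
```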

Now, as Google, Facebook, and other platforms continue to automate ad delivery, A/B testing is becoming a thing of the past. We can no longer simply run a split test. The new AdWords recommendation is 5-7 ads per ad group, letting the machine tell us which ad performs best: the ad it serves most often is the one its performance indicators favor.
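
Google does not publish how its optimized ad rotation works. Purely to build intuition, the sketch below substitutes Thompson sampling, a standard multi-armed bandit technique, to show how a system that chases performance ends up serving the strongest ad most often. `simulate_rotation` is a hypothetical helper, and the "true" CTRs are made-up inputs that no real platform exposes.

```python
import random


def simulate_rotation(true_ctrs, rounds=20_000, seed=0):
    """Thompson sampling over ads, with a Beta(1, 1) prior on each ad's CTR.

    Returns the number of impressions each ad received. This illustrates a
    generic bandit, not Google's actual (unpublished) rotation algorithm.
    """
    rng = random.Random(seed)
    n = len(true_ctrs)
    clicks, misses, served = [0] * n, [0] * n, [0] * n
    for _ in range(rounds):
        # Sample a plausible CTR for each ad from its posterior; serve the top draw.
        draws = [rng.betavariate(1 + c, 1 + m) for c, m in zip(clicks, misses)]
        ad = max(range(n), key=draws.__getitem__)
        served[ad] += 1
        # Simulate whether this impression produced a click.
        if rng.random() < true_ctrs[ad]:
            clicks[ad] += 1
        else:
            misses[ad] += 1
    return served


# Hypothetical true CTRs for four ads; the second ad is genuinely the best.
served = simulate_rotation([0.01, 0.05, 0.02, 0.03])
shares = [s / sum(served) for s in served]
print([f"{s:.1%}" for s in shares])
```

The serve share that comes out is the kind of signal the rest of this post reads: the ad the system keeps choosing is the one it believes performs best.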

Am I saddened to see simple A/B testing become a thing of the past? Of course! But I am also excited about this next step in account management, strategy, and tactical optimization. In the end, it gives me the opportunity to rely less on my gut and feelings and more on the specific signals the machine is calling out, and to take those to the client.

So today, let’s walk through a scenario and a new approach to testing ads now that ad optimization is being done for us.

The scenario

Now that Google's algorithm optimizes ad copy using KPIs, quality and relevance, conversion performance, and other factors, split ad testing has become more difficult as AI and machine learning take over. You can still run an ad experiment or an ad variations test under Drafts and experiments, but if you want to let Google help determine ad performance, start by looking at how Google serves your ads and adjust based on that.

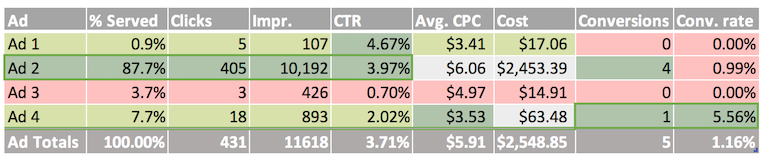

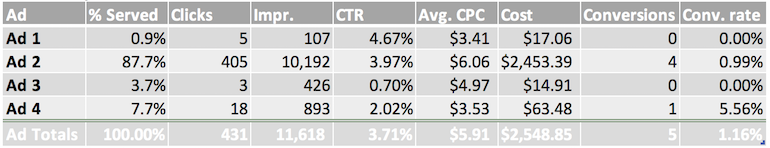

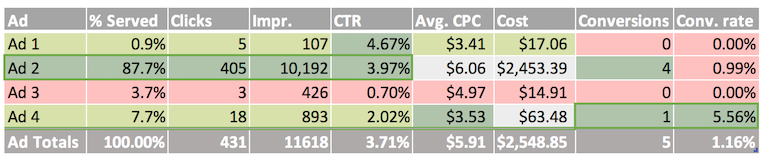

In this scenario, we are looking at an ad group with 4 ads. Ad #1 is serving 0.9% of the time; Ad #2, 87.7%; Ad #3, 3.7%; and Ad #4, 7.7%.

With this information, we can start to break down why Google shows Ad #2 most often, followed by Ad #4, and then make testing decisions for Ads #1 and #3.

Breaking down the data, we can see that Ad #2 had the second-highest CTR and the most conversions. Google treated it as a relevant ad based on CTR and conversions, without being particularly concerned with its conversion rate. Ad #4, shown second most often but still at a low percentage, had a 2% CTR and the second-lowest CPC, and although it had only 1 conversion, its conversion rate was a high 5.56%.
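
The KPIs above are simple ratios, and they can be run backwards. A single conversion at a 5.56% conversion rate implies about 18 clicks (1 / 18 ≈ 5.56%), and 18 clicks at a 2% CTR implies roughly 900 impressions. That is why Ad #4's strong rate rests on a thin sample. The minimal sketch below works through that arithmetic. The helper names are hypothetical, and because the reported CTR is rounded, the impression figure is approximate.

```python
def ctr(clicks: int, impressions: int) -> float:
    """Click-through rate: share of impressions that produced a click."""
    return clicks / impressions


def conversion_rate(conversions: int, clicks: int) -> float:
    """Conversion rate: share of clicks that produced a conversion."""
    return conversions / clicks


def implied_clicks(conversions: int, rate: float) -> int:
    """Back out the click count from a reported conversion rate."""
    return round(conversions / rate)


# Ad #4 as described: 1 conversion at a 5.56% conversion rate, with a ~2% CTR.
clicks = implied_clicks(1, 0.0556)       # 1 / 0.0556 is about 17.99, so 18 clicks
impressions = round(clicks / 0.02)       # approximate, since the 2% CTR is rounded

print(clicks, impressions)
print(f"conversion rate: {conversion_rate(1, clicks):.2%}")
print(f"CTR: {ctr(clicks, impressions):.2%}")
```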

With Google’s preferences in view, we can weigh all the KPIs together and adjust based on what the machine is telling us through the serve percentage.

There are multiple ways to take this new approach, and I know my reasoning may not be the next account manager’s first choice, but it all comes back to testing and creating new strategies and actionable ideas that drive results.

The new approach

With that said, I believe we can all agree that Ad #3 should be paused, as it has the lowest CTR, the second-highest CPC, and no conversions. It will be replaced by a new ad built from elements of the ads being kept.
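
The pause decision can be written as a simple rule: among ads with zero conversions, pause the one with the lowest CTR, using the higher CPC as a tie-breaker. The sketch below encodes that rule under those assumptions. `AdStats` and `pick_ad_to_pause` are hypothetical names, and the numbers are invented for illustration, not the figures from the screenshots.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AdStats:
    """Hypothetical per-ad totals for one ad group over the same date range."""
    name: str
    impressions: int
    clicks: int
    cost: float
    conversions: int

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def cpc(self) -> float:
        # An ad with no clicks has no meaningful CPC; treat it as infinitely expensive.
        return self.cost / self.clicks if self.clicks else float("inf")


def pick_ad_to_pause(ads: list[AdStats]) -> Optional[AdStats]:
    """Among ads with zero conversions, return the one with the lowest CTR,
    breaking ties in favor of the higher CPC. Returns None if every ad converted."""
    candidates = [ad for ad in ads if ad.conversions == 0]
    if not candidates:
        return None
    return min(candidates, key=lambda ad: (ad.ctr, -ad.cpc))


# Invented numbers for illustration only.
ads = [
    AdStats("Ad A", impressions=1_000, clicks=30, cost=45.00, conversions=1),
    AdStats("Ad B", impressions=8_000, clicks=200, cost=260.00, conversions=6),
    AdStats("Ad C", impressions=700, clicks=7, cost=14.00, conversions=0),
    AdStats("Ad D", impressions=900, clicks=18, cost=18.00, conversions=1),
    AdStats("Ad E", impressions=100, clicks=3, cost=3.00, conversions=0),
]
print(pick_ad_to_pause(ads).name)
```

A rule like this only shortlists a candidate. The serve percentage and the account manager's judgment still decide what actually gets paused.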

The next ad I would update is Ad #2. At first, this may seem to go against the entire philosophy of AI testing, but I don’t believe it does. Google heavily favored Ad #2, meaning its ad relevance was high and its click-through rate was good, but its conversion rate was low. As an account manager, I find that conversion rate also tells me a lot from the human side. In this instance, I would change only the landing page and see how this ad copy then performs against Ads #1 and #4 and a new Ad #3 built from pieces of the other three ads.

Conclusion

Through this, we’ve been able to determine that Ad #2 is a really good ad just by looking at Google’s preference, its front-end metrics, and its overall conversions (high partly because of how often it was served), but that it needs help with the landing page. Pausing this ad and testing its copy with a new landing page gives the other ads, which now have data behind them, a fair swing in the optimized rotation. This should push the system toward the ads that now perform better and have the most data.

So, the moral of the story: even though this method isn’t groundbreaking and there are many ways to analyze ad copy, don’t fret that Google is slowly taking away ad testing. Adapt how we look at ad testing, and use the information the machine gives us to our advantage when making decisions.