This month I’d like to share a script I originally built 3 years ago to help with ad text optimization in AdWords. It makes sure every ad group has enough ad experiments running, and it can create new ads across many ad groups in bulk. With a small tweak, it can also clean up the worst-performing ad in each ad group.

AdWords Script For Bulk Ad Creation

The main purpose of the script is to generate a bulk sheet for AdWords Bulk Uploads so you can add new ads to the account. You can use it to make sure every ad group has at least a minimum number of ad tests running, or simply to add a specific number of new ads to every ad group.

The script’s strength is that it knows how many ads exist in each ad group, so it can automatically create the right number of spreadsheet rows for each ad group to reach the number of ad experiments you want. For example, if you always want at least 3 ad tests per ad group, the script will add 1 row for an ad group that already has 2 active ads and 2 rows for an ad group with only 1 active ad.
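The row-count logic described above is simple arithmetic. The sketch below is an illustration, not the script’s actual code: the mode names `atLeast` and `addN` and the function `rowsToAdd` are hypothetical stand-ins for the “at least n” and “add n” optimization methods.

```javascript
// Sketch: how many new rows an ad group needs in the bulk sheet.
// "atLeast" and "addN" are hypothetical names for the script's
// "at least n" and "add n" optimization methods.
function rowsToAdd(activeAds, mode, n) {
  if (mode === "atLeast") {
    // Top up only ad groups below the target; never return a negative count.
    return Math.max(0, n - activeAds);
  }
  if (mode === "addN") {
    // Every ad group gets n new ads, however many it already has.
    return n;
  }
  throw new Error("Unknown optimization mode: " + mode);
}

// The example from the text: keep at least 3 ad tests per ad group.
const exampleGroups = [
  { name: "Ad group A", activeAds: 2 },
  { name: "Ad group B", activeAds: 1 },
  { name: "Ad group C", activeAds: 4 },
];
for (const group of exampleGroups) {
  console.log(group.name + ": " + rowsToAdd(group.activeAds, "atLeast", 3) + " new row(s)");
}
```

This prints 1, 2 and 0 new rows respectively: an ad group that already meets the target gets no rows at all.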

The script also saves time by pre-filling each new row with the text of the current best ad, chosen by your preferred criteria. More on those criteria later.

While the script is intended to help you create new ads in bulk, the beauty of scripts is that they are not particularly difficult to tweak. With a small change, it could instead identify the worst ad in each ad group so you can remove it through a bulk upload.

How Ad Optimization Is Usually Done

Since this script helps with running ad text experiments, it’s worth briefly covering the 3 main ways I see advertisers optimize their ad text:

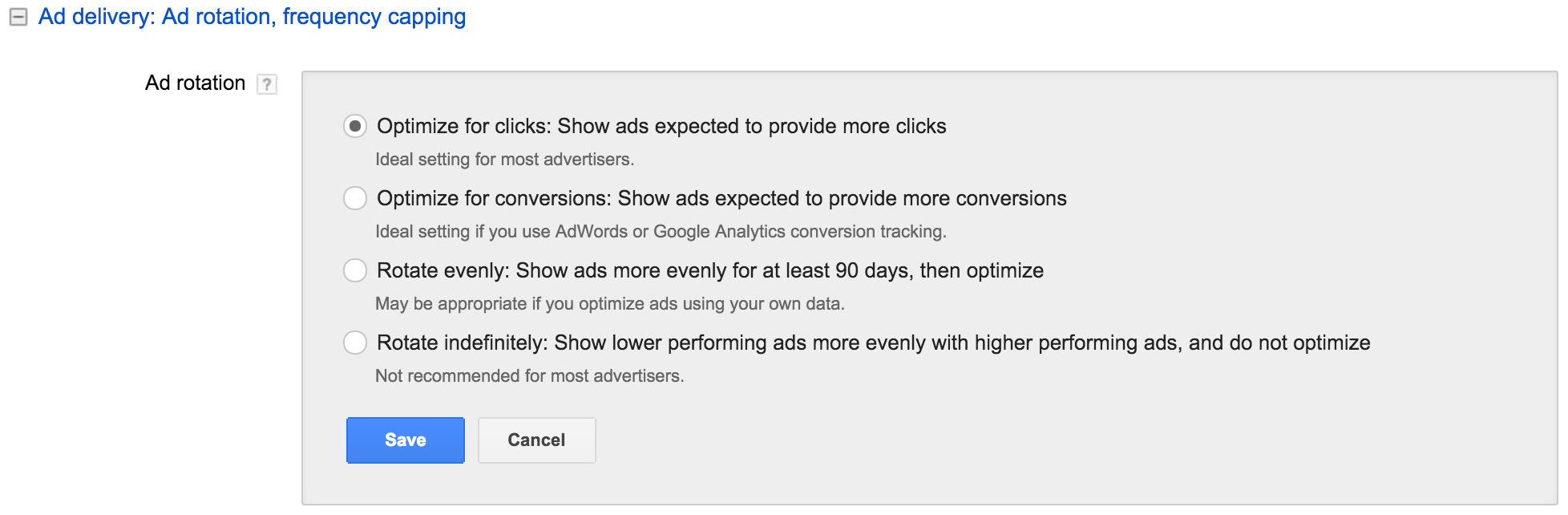

- Let Google handle it with optimized ad rotation

- Use a tool to help eliminate losing ad texts based on statistical analysis

- Do it manually

Here are my thoughts on each of these 3 techniques.

Google Optimized Ad Serving

Google makes money when users click on ads, so for as long as I can remember, it has automatically given preference to the ad with the highest CTR. When I was at Google, working on the team that handled ad text optimization, advertisers frequently asked for a way to automatically give more exposure to the ad with the better conversion rate.

That sounds good in theory, but it’s trickier than it looks: the ad with the best conversion rate may have a poor CTR, so it gets few clicks and drives few conversions, even though those clicks convert at a great rate. It took Google a while, but advertisers can now have Google automatically favor the ad that is expected to drive more conversions.
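A quick worked example makes the trade-off concrete. The numbers below are hypothetical, chosen only for illustration: Ad B has the better conversion rate, but Ad A converts more of its impressions because it earns so many more clicks. Multiplying CTR by conversion rate gives conversions per impression, which captures both effects.

```javascript
// Hypothetical numbers for two ads in the same ad group (illustration only).
const exampleAds = [
  { name: "Ad A", impressions: 1000, clicks: 50, conversions: 2 },
  { name: "Ad B", impressions: 1000, clicks: 10, conversions: 1 },
];

function adRatios(ad) {
  // Clicks per impression.
  const ctr = ad.impressions > 0 ? ad.clicks / ad.impressions : 0;
  // Conversions per click.
  const conversionRate = ad.clicks > 0 ? ad.conversions / ad.clicks : 0;
  // CTR x conversion rate reduces to conversions per impression.
  const conversionsPerImpression = ctr * conversionRate;
  return { ctr, conversionRate, conversionsPerImpression };
}

for (const ad of exampleAds) {
  const r = adRatios(ad);
  console.log(
    ad.name,
    "CTR", (r.ctr * 100).toFixed(1) + "%",
    "conv. rate", (r.conversionRate * 100).toFixed(1) + "%",
    "conv./impr.", r.conversionsPerImpression.toFixed(4)
  );
}
```

Ad B wins on conversion rate (10% vs. 4%), yet Ad A produces twice the conversions from the same number of impressions (0.0020 vs. 0.0010 per impression).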

There are some downsides to Google’s automated approach:

- It may seem like new ad tests end too early

- There is a false perception that ad testing is happening

Let me explain in more detail.

Tests Are Ended Too Early

When you add a new ad to the rotation, its “% Served” metric may quickly fall very low, before it has even reached 100 impressions. To a marketer, that may seem like an unreasonably quick judgment of the new ad. After all, maybe the ad would have done better on a different day of the week, at a different time of day, in a certain region, or in another segment of all available queries.

While at Google, I often had to explain why this happened, so I asked one of the product managers whether it could be a bug. I was repeatedly assured that the system was working as intended and that it quickly had enough data to make a statistically valid decision. Google looks at far more than the new ad’s own impressions; by comparing it with other ads, it can draw conclusions very quickly. Again, Google makes money when people click on ads, so it has a strong incentive to get this right and not waste too many impressions on underperforming ads.

While this seems counterintuitive, the data behind it says it’s right. This is simply a situation where we as advertisers have to trust that Google’s massive computing power is getting it right.

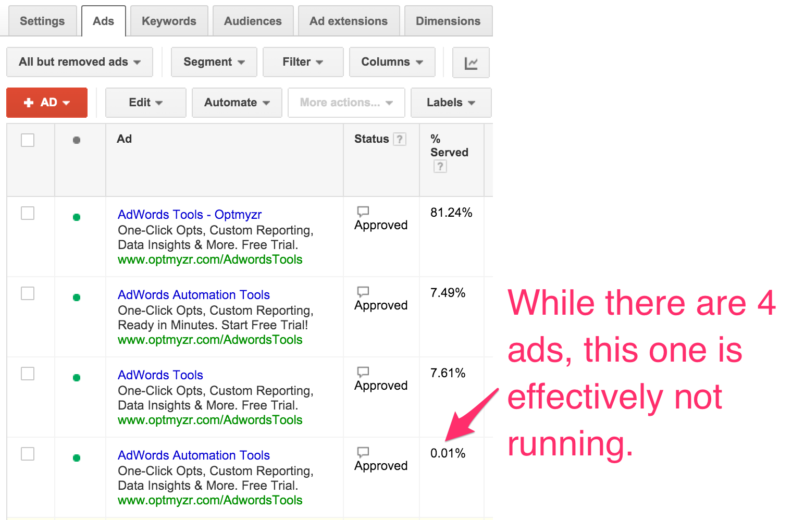

False Perception That Tests Are Happening

The other problem with letting Google handle ad optimization is that it may give advertisers a false perception that they are doing a better job than they actually are. When auditing an account, I usually check whether each ad group has multiple ads running. I even like to check that there are at least 2 mobile-preferred ads and 2 regular ads. With Google optimized ad serving, an ad group may have at least 2 ads, but one of them might get 99% of all the impressions, which effectively means no ad testing is happening.

Checking for this condition takes more work, so many advertisers miss it, and a client who has entrusted their account to an agency will almost certainly overlook this detail.
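One way to run this check is to group ad-level impressions by ad group and flag any ad group where a single ad holds nearly all of them. This is a minimal sketch that assumes the ad data has already been exported (for example, from an ad performance report) into plain objects; the field names `adGroup` and `impressions` and the function `findDominatedAdGroups` are hypothetical.

```javascript
// Sketch: flag ad groups where one ad gets nearly all of the impressions.
// Input rows are assumed to look like { adGroup: "...", impressions: 123 }.
function findDominatedAdGroups(adRows, shareThreshold) {
  // Collect each ad group's per-ad impression counts.
  const byGroup = new Map();
  for (const row of adRows) {
    if (!byGroup.has(row.adGroup)) byGroup.set(row.adGroup, []);
    byGroup.get(row.adGroup).push(row.impressions);
  }
  const flagged = [];
  for (const [adGroup, impressionList] of byGroup) {
    // Single-ad groups have no test at all; that is a separate check.
    if (impressionList.length < 2) continue;
    const total = impressionList.reduce((sum, x) => sum + x, 0);
    if (total === 0) continue;
    const topShare = Math.max(...impressionList) / total;
    if (topShare >= shareThreshold) flagged.push({ adGroup, topShare });
  }
  return flagged;
}

const sampleRows = [
  { adGroup: "Shoes", impressions: 9950 },
  { adGroup: "Shoes", impressions: 50 },
  { adGroup: "Boots", impressions: 600 },
  { adGroup: "Boots", impressions: 400 },
];
console.log(findDominatedAdGroups(sampleRows, 0.99));
```

Here “Shoes” is flagged because its top ad has 99.5% of the impressions, while “Boots” (60/40) is a real test.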

Ideally, any ad that has been deemed a loser should be removed. It declutters the account and makes it clear it’s time for new ads to be created.

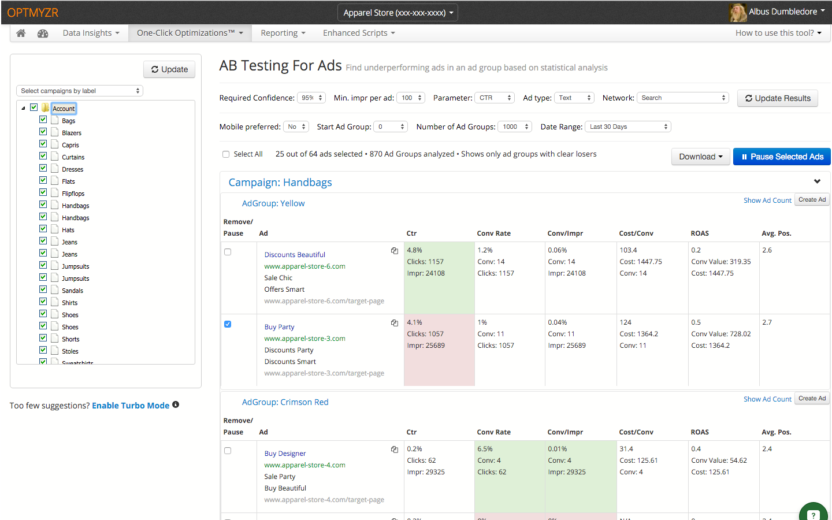

Ad Optimization Using Statistical Methods For A/B Tests

Some advertisers prefer more control over which ads get served more and run their own experiments. Determining a statistically significant winner and loser is fairly straightforward with one of the many statistical significance calculators. The challenge is applying this methodology across hundreds or thousands of ad groups.
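For CTR, one common choice is the pooled two-proportion z-test, which is easy to loop over every pair of ads in an account. This sketch shows that test only; it is not necessarily the method any particular calculator or tool uses, and it relies on the normal approximation, so it assumes reasonably large click and impression counts. The function names are hypothetical.

```javascript
// Pooled two-proportion z-test comparing the CTR of two ads.
// Each ad is { clicks, impressions }.
function ctrZScore(a, b) {
  const p1 = a.clicks / a.impressions;
  const p2 = b.clicks / b.impressions;
  // Pooled CTR under the null hypothesis that both ads perform the same.
  const pooled = (a.clicks + b.clicks) / (a.impressions + b.impressions);
  const se = Math.sqrt(pooled * (1 - pooled) * (1 / a.impressions + 1 / b.impressions));
  return se === 0 ? 0 : (p1 - p2) / se;
}

// |z| >= 1.96 corresponds to roughly 95% confidence (two-sided).
function isSignificant(a, b, zCritical = 1.96) {
  return Math.abs(ctrZScore(a, b)) >= zCritical;
}

// Same 5% vs. 4% CTR, very different amounts of data.
const bigA = { clicks: 500, impressions: 10000 };
const bigB = { clicks: 400, impressions: 10000 };
const smallA = { clicks: 10, impressions: 200 };
const smallB = { clicks: 8, impressions: 200 };
console.log(ctrZScore(bigA, bigB).toFixed(2), isSignificant(bigA, bigB));
console.log(ctrZScore(smallA, smallB).toFixed(2), isSignificant(smallA, smallB));
```

The same one-point CTR gap is significant with 10,000 impressions per ad (z ≈ 3.41) but not with 200 (z ≈ 0.48), which is why losers should only be removed once enough data has accumulated.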

It’s also important to consider which ratio to use to determine winners and losers. You could use CTR, as Google does by default, or you could use conversion rate, or conversions per impression, which combines the two (it equals CTR × conversion rate). Personally, I think ads should be optimized for CTR because the goal of the ad is to get a user to click and visit my site. Then it’s up to the landing page to convince that user to sign up and convert. If you find you don’t have enough control over landing pages, you might want to optimize ads for a metric that includes conversions.

There’s a great script written by Russ Savage that can automate this process. I haven’t tested it recently so I’m not sure it still works given recent changes to AdWords Scripts, but the code is a good baseline for anyone who wants to automate ad testing in this manner.

My company, Optmyzr, offers a popular tool for A/B ad testing that works for text ads, image ads, and even dynamic search ads, and it is a great alternative for those who prefer not to mess with scripts.

Manual Ad Optimization

Finally, some advertisers neither want Google to optimize ad serving nor pick the best ad based on one of the ratios that statistical significance calculators can evaluate. They may prefer to keep the ads with the lowest CPA, the best ROI, or some other metric. These advertisers may find my script useful for creating new ads based on what they consider the current best ad.

The script can identify the best or worst performing ad based on an AdWords metric or a calculated metric. For example, a calculated metric could be some combination of AdWords metrics, or it could be a metric that is calculated for each ad based on the aggregate data from its ad group, like the ‘percentage of total clicks’ the ad has received in its ad group.
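A calculated metric like “percentage of total clicks” needs the ad group’s totals before any single ad can be scored. This minimal sketch shows one way to compute such a share; the input field names and the function `addShareMetric` are hypothetical, and the `clickShare` output name only mirrors the setting name mentioned in this post.

```javascript
// Sketch: add a share-of-ad-group metric to each ad,
// e.g. clickShare = ad clicks / total clicks in the ad group.
function addShareMetric(ads, metric, shareField) {
  // First pass: total the metric per ad group.
  const totals = new Map();
  for (const ad of ads) {
    totals.set(ad.adGroup, (totals.get(ad.adGroup) || 0) + ad[metric]);
  }
  // Second pass: divide each ad's value by its ad group's total.
  return ads.map((ad) => {
    const total = totals.get(ad.adGroup);
    return { ...ad, [shareField]: total > 0 ? ad[metric] / total : 0 };
  });
}

const shareExampleAds = [
  { adGroup: "Shoes", id: 1, clicks: 30 },
  { adGroup: "Shoes", id: 2, clicks: 10 },
  { adGroup: "Boots", id: 3, clicks: 0 },
];
console.log(addShareMetric(shareExampleAds, "clicks", "clickShare"));
```

The two “Shoes” ads get shares of 0.75 and 0.25; an ad group with no clicks gets 0 rather than a division-by-zero result.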

The original purpose of the script was to help create new ads by writing the BEST performing ad from each ad group to a spreadsheet, but you could just as easily reverse the logic and make it write the WORST ad to the spreadsheet. Then you could do a simple find-and-replace on the sheet to turn “add” into “remove” so that, rather than making new ads, you’d remove the worst ad.
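Reversing the logic can be as small as taking the other end of a sorted list. This sketch is not the script’s code: `pickAd` and its options are hypothetical, with `keepHighest` standing in for keepHighestOrLowest and `minImpressions` for adImpressionThreshold. Applying the impression threshold to the worst ad as well is an assumption, so that an ad with too little data is never judged either way.

```javascript
// Sketch: choose the best (or, reversed, the worst) ad in one ad group.
// sortBy names a numeric field on each ad object, e.g. "ctr" or "cpa".
function pickAd(ads, { sortBy, keepHighest = true, minImpressions = 0, pickWorst = false }) {
  // Ads below the impression threshold are not judged at all.
  const eligible = ads.filter((ad) => ad.impressions >= minImpressions);
  if (eligible.length === 0) return null;
  // Order from best to worst according to the chosen direction.
  const sorted = [...eligible].sort((a, b) =>
    keepHighest ? b[sortBy] - a[sortBy] : a[sortBy] - b[sortBy]
  );
  // Reversing the logic: the worst ad is the last one in best-to-worst order.
  return pickWorst ? sorted[sorted.length - 1] : sorted[0];
}

const groupAds = [
  { id: 1, impressions: 1000, ctr: 0.05 },
  { id: 2, impressions: 1200, ctr: 0.03 },
  { id: 3, impressions: 50, ctr: 0.1 }, // too few impressions to be judged
];
const options = { sortBy: "ctr", keepHighest: true, minImpressions: 100 };
console.log("best:", pickAd(groupAds, options).id);
console.log("worst:", pickAd(groupAds, { ...options, pickWorst: true }).id);
```

This prints ad 1 as the best and ad 2 as the worst; ad 3 has the highest CTR but is skipped because it has only 50 impressions.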

The Script To Bulk Create New Ads

Here is the script. Copy and paste it into your account and run it as-is, or adjust some of the settings first. Remember that all this script does is generate a spreadsheet for bulk uploads, so there’s very little risk in running it in your account. As always, though, run it in preview mode first to be on the safe side.

To change the settings, change the values between lines 5 and 22. I’ve added some of the possible values you can use after the ‘//’ on each line. For the allowed date range settings, refer to the allowed values from the reporting specifications.

Script Settings

Here is a full rundown of what each of the settings does:

- currentSetting.enabledCampaignsOnly: set to 1 to include only enabled campaigns

- currentSetting.enabledAdGroupsOnly: set to 1 to include only enabled ad groups

- var adLabel: the name of the ad label for ads to consider. For example, if you want to make sure you have three weekend ads running and those are all labeled ‘weekend’, include that label here so the script won’t do anything with your weekday ads

- currentSetting.calculation1: use this to pick the best ad using a custom formula, for example “?Ctr * ?ConversionRate”. Note that each metric should be preceded with a question mark so that the script will know to replace this with the actual value of that metric.

- currentSetting.keepHighestOrLowest: whether the ad with the highest or lowest value is considered the best one.

- currentSetting.sortBy: the metric by which to sort ads from best to worst. This could be any of the standard AdWords metrics like “Ctr”, the custom calculation “calculation1”, or one of the calculated ratios “clickShare”, “impressionShare”, “conversionShare” or “convertedClicksShare”, which give each ad’s percentage of its ad group’s total for that metric.

- currentSetting.showAllAds: set to 1 if you want all ads from an ad group to be rendered in the spreadsheet.

- currentSetting.devicePreference: to pick the device type preference. Allowed values are “notMobilePreferred”, “all”, “mobilePreferred”

- currentSetting.optimizationMethod: whether you’d like to “add n” new ads to all ad groups or ensure every ad group has “at least n” ad tests running (for the selected device preference and with the specified ad label).

- currentSetting.numAdsToKeep: the number of ads you’d like to be testing in every ad group, or the number of new ads you’re trying to add.

- currentSetting.time: the date range used to calculate the best performing ad, e.g. “LAST_30_DAYS”.

- currentSetting.email: who should get an email every time the script finishes running.

- accountManagers: who should have access to the generated Google Spreadsheet. Separate multiple email addresses with commas, e.g. “example@example.com, example@example.com”.

- var campaignNameContains: if you only want to include campaigns whose name includes this text, e.g. “search”.

- var campaignLabel: if you only want to include campaigns that have a certain label.

- currentSetting.adImpressionThreshold: the minimum number of impressions before an ad may be considered to be the best.

- currentSetting.showAdLabels: set to 1 to include the ad’s labels in the spreadsheet.

- currentSetting.showAdGroupLabels: set to 1 to include the ad group’s labels in the spreadsheet.
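The question-mark convention in currentSetting.calculation1 works by substituting each “?Metric” token with that ad’s value before evaluating the arithmetic. This sketch shows that idea under stated assumptions: `evaluateCalculation` and the metrics object are hypothetical, the formula is treated as trusted input written by the account owner, and a character whitelist is checked before the `Function` constructor evaluates the expression. It is not necessarily how the script itself evaluates formulas.

```javascript
// Sketch: evaluate a custom formula such as "?Ctr * ?ConversionRate" for one ad.
// Assumption: the formula is trusted input written by the account owner.
function evaluateCalculation(formula, metrics) {
  // Replace every "?Name" token with the metric's numeric value.
  const expression = formula.replace(/\?(\w+)/g, (match, name) => {
    if (!(name in metrics)) throw new Error("Unknown metric in formula: " + name);
    return "(" + Number(metrics[name]) + ")";
  });
  // After substitution, only numbers, arithmetic operators and parentheses may remain.
  if (!/^[\d\s+\-*\/().eE]*$/.test(expression)) {
    throw new Error("Unsupported characters in formula: " + formula);
  }
  return Function('"use strict"; return (' + expression + ");")();
}

const adMetrics = { Ctr: 0.05, ConversionRate: 0.04 };
console.log(evaluateCalculation("?Ctr * ?ConversionRate", adMetrics));
```

With these hypothetical values the formula evaluates to about 0.002, and a formula that names a metric missing from the object fails loudly instead of silently scoring the ad as zero.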

Conclusion

I hope you find this script useful for creating ads in bulk in AdWords. As always, I hope you will consider editing it to do exactly what you need, for example, to remove the worst ads from your account. If you want to use this script but don’t want to deal with the complexity of editing the settings in the code, consider trying the Enhanced Script™ version, which is part of the Optmyzr PPC tool suite (my company). It makes the settings easier to change, works in MCC accounts, is maintained and updated to keep working even when Google changes its APIs, and has a few other small enhancements to improve usability.